
At Clearwater Analytics, we have always been at the forefront of AI innovation. Our journey into Generative AI began long before it became mainstream, giving us a head start in building intelligent systems tailored to the financial domain. To fully appreciate the advanced AI multi-agent system (CWIC Flow) we developed, it is essential to understand the foundation on which it was built — the Transformer architecture. This article explores how transformers revolutionized Natural Language Processing (NLP) and paved the way for AI-driven decision-making at Clearwater Analytics.

Early NLP approaches were dominated by rule-based systems, which struggled to generalize. The shift to machine learning-based models, such as Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks, improved the handling of sequential data. However, these models had major limitations, including difficulty capturing long-range dependencies and computational inefficiency, because they must process tokens one at a time.

The introduction of seq2seq (sequence-to-sequence) architectures, particularly for tasks like machine translation, was a significant step forward. However, these models compressed the entire input into a single fixed-length vector, so they still struggled with scaling and with retaining long-range context. That bottleneck led to the next breakthrough: Transformers.

In 2017, Vaswani et al. introduced the Transformer model in their groundbreaking paper, “Attention Is All You Need.” This architecture eliminated the need for recurrence, instead using self-attention to process entire input sequences simultaneously. Because this addresses both limitations noted above, letting computation run in parallel across the sequence and letting every token attend directly to every other token however far apart, Transformers became the go-to architecture for NLP tasks.

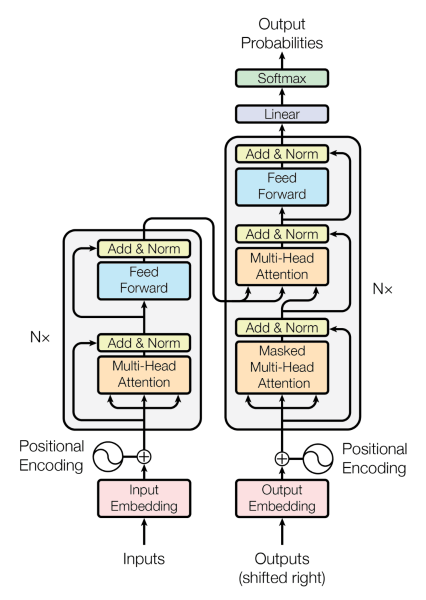

“Attention is All You Need” by Vaswani et al., 2017


At a high level, a Transformer has an encoder-decoder architecture: we feed in input text, and we get output text.

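The key change from earlier sequential models is how data passes from the encoder to the decoder. Instead of a single vector, as in seq2seq models, the encoder hands the decoder one vector per input token, and the decoder uses attention to decide which of them to focus on at each step.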

“Attention is All You Need” by Vaswani et al., 2017


If we look closer at the encoder, we find that it starts with a text embedding layer that translates words into numerical vectors the model can work with. The embedding converts each token into a vector, which is why the input must be tokenized first.

Here’s a detailed explanation of how word embedding works for the sentence “The cat sat on the mat”:


1. Tokenization: First, we split the sentence into individual words or tokens: [“The”, “cat”, “sat”, “on”, “the”, “mat”].

2. Word IDs: Each unique token in our vocabulary is assigned a unique ID. For simplicity, let’s say our vocabulary consists only of the words in our sentence and is case-sensitive. We might assign the IDs as follows: “The” = 0, “cat” = 1, “sat” = 2, “on” = 3, “the” = 4, “mat” = 5. Note that “The” and “the” get different IDs only because they differ in capitalization; if exactly the same token appeared twice, both occurrences would share one ID. Equivalently, you can think of each ID as a one-hot vector over the vocabulary.

3. Word Embedding Matrix: We have a pre-trained word embedding matrix in which each row is one word’s embedding. The number of columns is the dimension of the embedding space (let’s say 4 for simplicity), so for our six-word vocabulary the matrix has 6 rows and 4 columns.

4. Vectorization: To convert the sentence into a sequence of vectors, we look up the embedding vector for each word using its ID, which means picking the matrix row with that index. For our example sentence, this yields a sequence of six 4-dimensional vectors, one per token.

This sequence of vectors can then be fed into the encoder of a transformer model for further processing, such as adding position embeddings and computing attention weights. The goal of these additional steps is to capture the contextual relationships between the words in the sentence.
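The four steps above can be sketched in plain Python. This is a toy illustration under stated assumptions, not how a production tokenizer or embedding layer works: the whitespace split, the names `vocab` and `embedding_matrix`, and the embedding values themselves are all made up (a real model learns its embeddings). It also checks the one-hot view from step 2: multiplying a one-hot vector by the embedding matrix selects the same row as a direct lookup.

```python
# Toy sketch: tokenization -> word IDs -> embedding lookup.
# All names and numbers here are illustrative assumptions, not a real model's values.

sentence = "The cat sat on the mat"

# 1. Tokenization: a naive whitespace split (real tokenizers often use subwords).
tokens = sentence.split()

# 2. Word IDs: each *unique* token gets an ID in order of first appearance.
#    This vocabulary is case-sensitive, so "The" and "the" are separate entries.
vocab = {}
for tok in tokens:
    vocab.setdefault(tok, len(vocab))
ids = [vocab[tok] for tok in tokens]

# If we lower-case first, both occurrences of "the" share a single ID.
lower_tokens = sentence.lower().split()
lower_vocab = {}
for tok in lower_tokens:
    lower_vocab.setdefault(tok, len(lower_vocab))
lower_ids = [lower_vocab[tok] for tok in lower_tokens]

# 3. Embedding matrix: one row per vocabulary ID, 4 columns (hypothetical values).
d_model = 4
embedding_matrix = [
    [round(0.1 * (row + 1) + 0.01 * col, 2) for col in range(d_model)]
    for row in range(len(vocab))
]

# 4. Vectorization: look up the embedding row for each token ID.
vectors = [embedding_matrix[i] for i in ids]

# One-hot view: a one-hot row vector times the matrix picks out the same row.
def one_hot(index, size):
    return [1.0 if j == index else 0.0 for j in range(size)]

def vec_mat(v, m):
    """Multiply row vector v by matrix m (given as a list of rows)."""
    return [sum(v[r] * m[r][c] for r in range(len(m))) for c in range(len(m[0]))]

print(ids)         # [0, 1, 2, 3, 4, 5]
print(lower_ids)   # [0, 1, 2, 3, 0, 4]
print(vectors[1])  # embedding row for "cat"
```

Lower-casing is a design choice: it shrinks the vocabulary and lets repeated words share an embedding, at the cost of discarding capitalization.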

“Attention is All You Need” by Vaswani et al., 2017


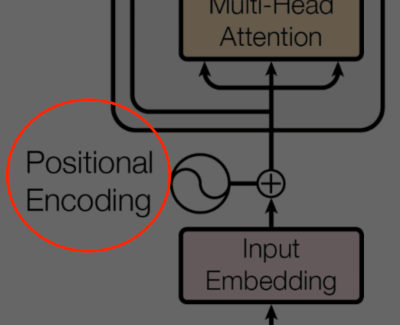

Positional encoding is a technique used in deep learning, particularly in natural language processing (NLP) and sequence modeling, to help models understand the order of elements in a sequence, such as the words in a sentence. Order matters because rearranging words can change a sentence’s meaning entirely. RNNs and LSTMs capture order implicitly because they process tokens one at a time. The Transformer, by contrast, processes the whole sequence in parallel, which makes it more efficient but also means it has no built-in sense of order: without extra information, the self-attention output for each word would not change if the other words were shuffled.

To address this, positional encoding is added to the input embeddings of the Transformer model. This encoding is a vector that contains information about the position of each element in the sequence. There are various ways to generate positional encodings, but the original Transformer paper used sine and cosine functions of different frequencies:

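$$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right), \qquad PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right)$$

where $pos$ is the token’s position in the sequence, $i$ indexes pairs of embedding dimensions, and $d_{\text{model}}$ is the embedding size.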

These functions were chosen because they give each position a distinct encoding and because, for any fixed offset $k$, the encoding of position $pos + k$ can be written as a linear function of the encoding of position $pos$. This makes it easier for the model to learn to attend by relative position.
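A minimal pure-Python sketch of the sinusoidal encoding follows. The helper names (`positional_encoding`, `shift_by`, `add_positional_encoding`) are our own, and `d_model` is assumed to be even. `shift_by` checks the relative-position property numerically: each (sin, cos) pair of dimensions is rotated by an angle that depends only on the offset `k`, so PE(pos + k) is obtained from PE(pos) by a fixed linear map.

```python
import math

def positional_encoding(pos, d_model):
    """Sinusoidal encoding for one position (d_model assumed even).

    Dimension 2i holds sin(pos / 10000^(2i/d_model));
    dimension 2i+1 holds cos of the same angle.
    """
    pe = [0.0] * d_model
    for i in range(d_model // 2):
        angle = pos / (10000 ** (2 * i / d_model))
        pe[2 * i] = math.sin(angle)
        pe[2 * i + 1] = math.cos(angle)
    return pe

def shift_by(pe, k, d_model):
    """Compute PE(pos + k) from PE(pos) alone, using a rotation per (sin, cos) pair.

    The rotation angle depends only on the offset k, never on pos, so this is a
    fixed linear map for each k.
    """
    out = [0.0] * d_model
    for i in range(d_model // 2):
        delta = k / (10000 ** (2 * i / d_model))
        s, c = pe[2 * i], pe[2 * i + 1]
        out[2 * i] = s * math.cos(delta) + c * math.sin(delta)      # sin(a + b)
        out[2 * i + 1] = c * math.cos(delta) - s * math.sin(delta)  # cos(a + b)
    return out

def add_positional_encoding(embeddings):
    """Add the encoding for positions 0, 1, 2, ... to each embedding vector."""
    d_model = len(embeddings[0])
    return [
        [x + p for x, p in zip(vec, positional_encoding(pos, d_model))]
        for pos, vec in enumerate(embeddings)
    ]

# PE(5 + 3) reconstructed from PE(5) matches PE(8) up to rounding error.
d = 8
reconstructed = shift_by(positional_encoding(5, d), 3, d)
print(all(math.isclose(a, b, abs_tol=1e-9) for a, b in zip(reconstructed, positional_encoding(8, d))))
```

Because the encoding is computed rather than learned, it can be evaluated for any position; the original paper notes this may let the model extrapolate to sequences longer than those seen during training.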

Since its introduction in the Transformer, positional information has become a standard component of many subsequent NLP models, including BERT, GPT, and their variants, although BERT and the original GPT models learn their position embeddings rather than using fixed sinusoids. Encoding position is crucial for these models because word order is essential to many NLP tasks, such as translation, text generation, and sentiment analysis.

In the transformer architecture, the encoder receives input vectors and generates more informative and context-rich output vectors.

In simple terms, the encoder in a transformer takes a sentence, breaks it down into tokens, represents each token as a list of numbers, adds information about each token’s position in the sentence, and then combines all this information through stacked self-attention and feed-forward layers. The result is a richer, context-aware representation of every token, and therefore of the sentence as a whole.

The concept of attention in the context of neural networks, particularly in natural language processing (NLP), is a mechanism that allows models to focus on specific parts of the input when producing an output. This idea has been particularly influential in the development of the Transformer architecture.

Key Papers:

The success of the Transformer and its attention mechanism has led to the development of numerous models based on this architecture, such as BERT, GPT, and T5, which have significantly advanced the field of NLP.

Let’s take a simple example to understand self-attention in the context of a sentence:

Sentence: “The cat sat on the mat.”

In this sentence, let’s say we want to understand the relationships between the words using self-attention. We can think of self-attention as a way for each word to score its relationship with every other word in the sentence, including itself. These scores indicate how much focus or attention should be given to other words when considering a particular word.

1. Represent Words as Vectors: First, we represent each word as a vector. For simplicity, let’s assume these are 2D vectors.

2. Compute Attention Scores: Next, for each word, we compute a score that represents its relationship with every other word. This is done by taking the dot product of the vectors. For example, the attention score between “cat” and “sat” is the dot product of their vectors: $\text{score}(\text{cat}, \text{sat}) = \mathbf{v}_{\text{cat}} \cdot \mathbf{v}_{\text{sat}}$.

3. Normalize Scores: We then normalize these scores, typically with the softmax function, so that they sum to 1 for each word. This lets the attention scores be read as a probability distribution over the words in the sentence.

4. Compute Weighted Sum: Finally, for each word, we compute a new vector as the weighted sum of all word vectors, using the attention scores as weights. This new vector can be thought of as a representation of the word that incorporates information from the other words in the sentence based on their relevance.
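Steps 1 to 4 can be sketched in a few lines of plain Python. The 2D vectors below are invented for illustration (the example does not fix their values), and softmax is assumed for the normalization in step 3. There are no query, key, or value projections yet; each word’s own vector plays all three roles.

```python
import math

words = ["The", "cat", "sat", "on", "the", "mat"]
# Step 1: hypothetical 2D vectors; "cat", "sat", and "mat" point in a similar direction.
vecs = [
    [0.1, 0.9],  # The
    [1.0, 0.2],  # cat
    [0.9, 0.3],  # sat
    [0.2, 0.7],  # on
    [0.1, 0.9],  # the
    [0.8, 0.1],  # mat
]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def softmax(scores):
    m = max(scores)  # subtracting the max avoids overflow; the result is unchanged
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def simple_self_attention(vectors):
    outputs, weights = [], []
    for query in vectors:
        # Step 2: score this word against every word, including itself.
        scores = [dot(query, other) for other in vectors]
        # Step 3: normalize the scores so they sum to 1.
        w = softmax(scores)
        # Step 4: new vector = attention-weighted sum of all word vectors.
        new_vec = [sum(w_j * v[d] for w_j, v in zip(w, vectors)) for d in range(len(query))]
        weights.append(w)
        outputs.append(new_vec)
    return outputs, weights

outputs, weights = simple_self_attention(vecs)
cat_weights = weights[words.index("cat")]
for word, w in zip(words, cat_weights):
    print(f"{word:>4}: {w:.3f}")
```

With these made-up vectors, “cat” gives more weight to “sat” and “mat” than to “on” or “the” (and, in this example, the most weight to itself).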

In our example, the self-attention mechanism allows each word to consider the context provided by the other words in the sentence. For instance, “cat” might have a higher attention score with “sat” and “mat” because they are directly related in the context of this sentence.

This is a very simplified explanation, and in practice, the self-attention mechanism in models like the Transformer involves multiple layers of computation, including separate vectors for queries, keys, and values, as well as multiple heads for capturing different types of relationships. However, the core idea is that self-attention allows the model to dynamically focus on different parts of the input sequence based on the context.
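The fuller version from the original paper projects each input vector into a query, a key, and a value with learned matrices, then computes $\text{Attention}(Q, K, V) = \text{softmax}\left(QK^\top / \sqrt{d_k}\right)V$, where dividing by $\sqrt{d_k}$ keeps the dot products from growing with the key dimension. The sketch below implements that formula and a simple multi-head wrapper in pure Python. Random matrices stand in for learned weights, and the helper names and sizes (six tokens, `d_model = 4`, two heads of size 2) are illustrative assumptions rather than any particular model’s configuration.

```python
import math
import random

def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(q, k, v):
    """softmax(Q K^T / sqrt(d_k)) V, returning the outputs and the attention weights."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

def self_attention(x, w_q, w_k, w_v):
    """Project the same input rows into queries, keys, and values, then attend."""
    return scaled_dot_product_attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))

def multi_head_attention(x, heads, w_o):
    """Run each head independently, concatenate their outputs per token, then project."""
    head_outputs = [self_attention(x, w_q, w_k, w_v)[0] for (w_q, w_k, w_v) in heads]
    concat = [sum((out[i] for out in head_outputs), []) for i in range(len(x))]
    return matmul(concat, w_o)

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
n_tokens, d_model, n_heads = 6, 4, 2
d_head = d_model // n_heads
x = random_matrix(n_tokens, d_model, rng)  # stands in for embeddings + positions
heads = [tuple(random_matrix(d_model, d_head, rng) for _ in range(3)) for _ in range(n_heads)]
w_o = random_matrix(n_heads * d_head, d_model, rng)

out = multi_head_attention(x, heads, w_o)
print(len(out), len(out[0]))  # 6 4
```

The same `scaled_dot_product_attention` function also describes encoder-decoder attention: there the queries come from the decoder, while the keys and values come from the encoder’s per-token outputs.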

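Self-attention calculates attention scores to determine how important each word is relative to the others. This mechanism allows transformers to capture long-range dependencies directly and to process every position in parallel, overcoming the two limitations of RNNs described earlier.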

Clearwater Analytics has fully embraced transformer-based AI models in CWIC Flow, an advanced Gen AI-driven financial intelligence system. CWIC Flow uses transformers to enhance knowledge awareness, application awareness, and data awareness, providing clients with accurate insights, intuitive guidance, and real-time analytics. For more details, refer to the previous article.

Enhance Knowledge Awareness: AI-Powered Financial Expertise

Traditional customer support often requires users to navigate vast documentation to find answers. CWIC Flow’s Retrieval-Augmented Generation (RAG) model, powered by transformers, allows investment professionals to ask questions in natural language and receive precise, context-rich responses with cited sources.

Example: A client asks, “How does Clearwater calculate book yield?” Instead of returning a list of documents, CWIC Flow’s AI retrieves company-specific calculations and methodologies and provides an immediate, authoritative answer.

Enable Application Awareness: Contextual AI for Investment Software

Navigating financial applications can be overwhelming, especially for new users. CWIC Flow’s transformer models integrate with application-specific UI and user profiles to provide instant navigation, guidance, and recommendations.

Example: A user types, “Show me my accounting settings report.” CWIC Flow identifies the correct report, generates a direct one-click access link, and explains its key metrics. This AI-driven application awareness transforms novice users into power users within seconds.

Drive Data Awareness: Real-Time Insights and Anomaly Detection

Clearwater Analytics processes massive financial datasets daily. CWIC Flow’s transformer-based AI models analyze trends, detect anomalies, and provide actionable insights in real time, helping clients stay ahead of risks and opportunities. For more information about using Snowflake for data awareness, refer to our previous article.

Example: An investment manager asks, “Are there any cash flow anomalies in the past year?” CWIC Flow automatically scans financial records, identifies outliers, and generates an explanation of potential causes, streamlining decision-making.

While transformers have redefined AI capabilities, Clearwater Analytics is pushing innovation further with multi-agent AI orchestration, fine-tuned models, and real-time AI monitoring. Our commitment to AI excellence ensures that we remain at the cutting edge of financial technology.

While transformers have revolutionized NLP, the next frontier in AI innovation is Vision-Language-Action (VLA) models, exemplified by Google DeepMind’s Robotic Transformer 2 (RT-2). Unlike traditional transformers that focus on text processing, RT-2 extends AI’s capability to interpret images and translate them into real-world actions.

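Key Advancements in RT-2: RT-2 is trained on both web-scale vision-language data and robot demonstration data, and it represents robot actions as text tokens, so a single model can interpret images and language and output actions directly.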

Implications for AI at Clearwater Analytics

While RT-2 is designed for robotics, its core principle, combining multimodal understanding with action-based outputs, has strong parallels with AI-driven financial automation. At Clearwater, this could inspire AI agents that move beyond answering questions to carrying out actions within investment workflows.

Just as transformers redefined NLP, VLA models like RT-2 signal a new era of AI — one that moves beyond language to real-world intelligence.

Transformers revolutionized NLP and set the stage for the sophisticated AI ecosystem at Clearwater Analytics. By understanding the foundations of LLMs, we can better appreciate how they power CWIC Flow, our multi-agent AI system, which redefines how investment data is analyzed and acted upon. As we continue to innovate, transformers remain a key pillar in our mission to provide intelligent, AI-powered financial solutions.

Furthermore, emerging Vision-Language-Action models like RT-2 signal the next wave of AI evolution, where models can not only understand information but also take action based on it. By integrating similar principles into CWIC Flow, Clearwater can stay ahead in AI-driven financial intelligence, ensuring greater automation, deeper insights, and more proactive decision-making in investment management.

Rany ElHousieny is an Engineering Leader at Clearwater Analytics with over 30 years of experience in software development, machine learning, and artificial intelligence. He spent two decades in leadership roles at Microsoft, where he led the NLP team at Microsoft Research and Azure AI, contributing to advancements in AI technologies. At Clearwater, Rany continues to draw on this background to drive innovation in AI, helping teams solve complex challenges while maintaining a collaborative approach to leadership and problem-solving.