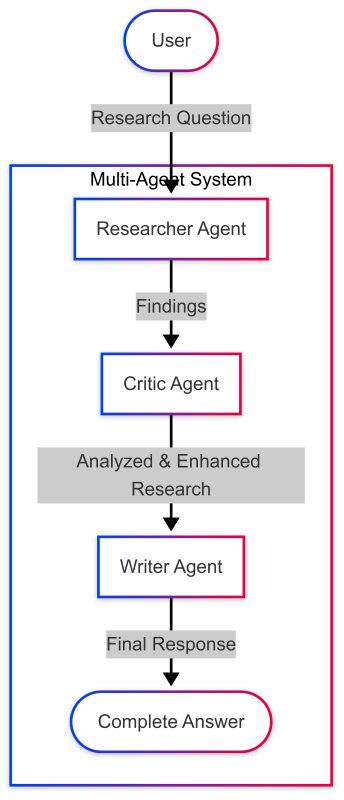

In the previous article, we explored the concept of multi-agent systems and how Clearwater Analytics pioneered this approach with CWIC Flow. We also demonstrated how to build a simple multi-agent system without specialized frameworks. Now, let’s explore LangGraph, a powerful library that provides structured workflows for building sophisticated multi-agent systems.

LangGraph is a library built on top of LangChain that allows you to create stateful, multi-agent applications with Large Language Models (LLMs). It provides a structured way to compose chains into graphs, manage state across multiple LLM calls, and build complex workflows with conditional routing.

Before diving into implementation, let’s understand some key concepts in LangGraph:

This structured approach allows for more complex interactions between agents, including conditional routing, feedback loops, and persistent memory.

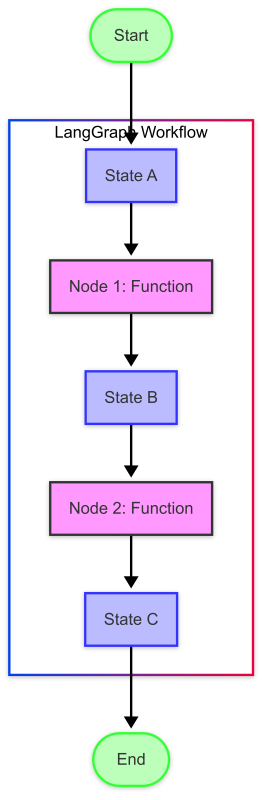

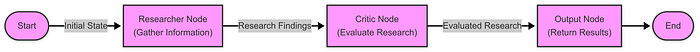

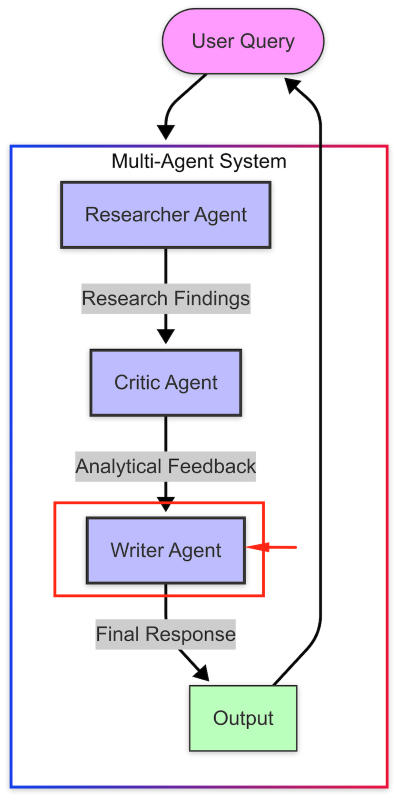

Let’s visualize how a basic LangGraph works:

This diagram illustrates the key concepts:

First, let’s make sure we have all the necessary packages installed. If you’re running this code for the first time, you’ll need to install the required packages.

# Install required packages with latest versions
!pip install langchain langchain-openai langgraph python-dotenv

Now, let’s set up our environment variables. We’ll need an API key for OpenAI to use their models. Create a .env file in the same directory as this notebook with your OpenAI API key:

OPENAI_API_KEY=your_api_key_here

# Load environment variables from .env file
import os
from dotenv import load_dotenv

load_dotenv()  # Load API keys from .env file

# Verify that the API key is loaded
if os.getenv("OPENAI_API_KEY") is None:
    print("Warning: OPENAI_API_KEY not found in environment variables.")
else:
    print("OPENAI_API_KEY found in environment variables.")

Now, let’s import the libraries we’ll need for our multi-agent system:

# Import necessary libraries
from typing import Dict, List, TypedDict, Annotated, Sequence, Any, Optional, Literal, Union
import json

# Modern imports for langchain and langgraph
from langchain_core.messages import HumanMessage, AIMessage, SystemMessage, BaseMessage
from langchain_openai import ChatOpenAI
from langgraph.graph import StateGraph, END

Before we dive into multi-agent systems, let’s create a simple helper function to interact with an LLM. This will help us understand the basic building blocks of LangGraph.

def ask_llm(prompt, model="gpt-4o", temperature=0.7):
    """A simple function to get a response from an LLM."""
    # Create a ChatOpenAI instance
    llm = ChatOpenAI(model=model, temperature=temperature)
    # Create a message with the prompt
    messages = [HumanMessage(content=prompt)]
    # Get a response from the LLM
    response = llm.invoke(messages)
    # Return the content of the response
    return response.content

# Let's test our function
response = ask_llm("What is LangGraph and how does it relate to LangChain?")
print(response)

Response output:

LangGraph is an open-source library designed for building and managing complex applications that use large language models (LLMs). It is a framework that offers tools and components to create, customize, and execute workflows involving LLMs, making it easier to integrate these models into various applications. LangGraph is closely related to LangChain, as it can be thought of as an extension or complementary toolset to LangChain. LangChain is another framework specifically aimed at developing applications with LLMs, focusing on chaining together different components or tasks to create more complex workflows. LangGraph builds upon the principles of LangChain by providing additional functionalities and a more structured way to define and manage LLM-based applications. In essence, while LangChain focuses on the chaining of language model-powered tasks, LangGraph provides a more comprehensive framework that includes graph-based execution flows, enhanced control over tasks, and improved management of the interactions between different components. Together, these tools can be used to streamline the development and deployment of sophisticated applications leveraging the capabilities of LLMs.

Let’s create a very simple graph with just one agent to understand these concepts:

# Define the state type
class SimpleState(TypedDict):
    messages: List[BaseMessage]  # The conversation history
    next: str  # Where to go next in the graph

# Define a simple agent node
def simple_agent(state: SimpleState) -> SimpleState:
    """A simple agent that responds to the last message."""
    messages = state["messages"]
    llm = ChatOpenAI(model="gpt-4o", temperature=0.7)
    response = llm.invoke(messages)
    return {"messages": messages + [response], "next": "output"}

# Define an output node
def output(state: SimpleState):
    """Return the final state. This marks the end of the workflow."""
    return {"messages": state["messages"], "next": END}

# Build the graph
def build_simple_graph():
    """Build a simple LangGraph workflow."""
    # Create a new graph
    workflow = StateGraph(SimpleState)
    # Add nodes
    workflow.add_node("agent", simple_agent)
    workflow.add_node("output", output)
    # Add edges
    workflow.add_edge("agent", "output")
    # Connect the last node to END so the graph has an explicit finish point
    workflow.add_edge("output", END)
    # Set the entry point
    workflow.set_entry_point("agent")
    # Compile the graph
    return workflow.compile()

Now, let’s run our simple graph:

# Create the graph
simple_graph = build_simple_graph()

# Initialize the state with a test message
initial_state = {
    "messages": [HumanMessage(content="Explain what a multi-agent system is in simple terms.")],
    "next": ""
}

# Run the graph
result = simple_graph.invoke(initial_state)

# Print the conversation
for message in result["messages"]:
    if isinstance(message, HumanMessage):
        print(f"Human: {message.content}")
    elif isinstance(message, AIMessage):
        print(f"AI: {message.content}")

Output:

Human: Explain what a multi-agent system is in simple terms.

AI: A multi-agent system is a collection of independent “agents” that interact with each other. Think of each agent as an individual with its own ability to make decisions, similar to how people can think and act on their own. These agents work together or compete to achieve specific goals or complete tasks.

In simple terms, imagine a group of robots that clean your house. Each robot is an agent. One might vacuum the floors, another might dust the furniture, and another might take out the trash. They all work independently but communicate and coordinate with each other to make sure the entire house is cleaned efficiently. This is the essence of a multi-agent system: multiple entities working together, often in a coordinated manner, to perform complex tasks or solve problems.

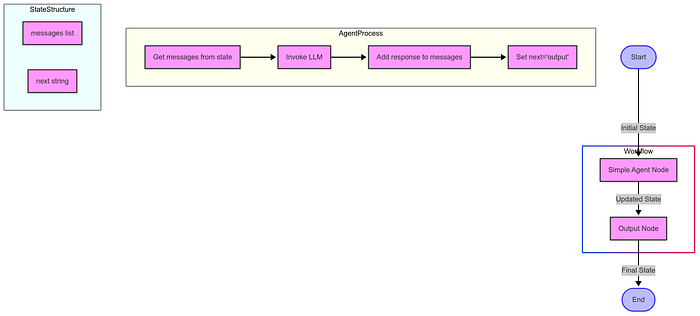

This diagram shows:

The workflow starts with an initial state containing messages and next fields, processes it through the Agent node which adds a response using the LLM, then passes it to the Output node which returns the final state, ending the workflow.

Now, let’s understand what just happened:

This simple example illustrates the basic concepts of LangGraph. In real-world scenarios, you can create much more complex graphs with multiple nodes and conditional edges.
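To make the mechanics concrete without an API key, here is a minimal plain-Python sketch of the same pattern: nodes are functions that take the state and return an update, and edges say which node runs next. It is not LangGraph itself. The `run_graph` helper, the `END` sentinel, and the stub `echo_agent` are hypothetical stand-ins used only for illustration.

```python
from typing import Callable, Dict, List, TypedDict

END = "__end__"  # hypothetical sentinel for this sketch, standing in for LangGraph's END


class SketchState(TypedDict):
    messages: List[str]  # plain strings instead of BaseMessage objects


def echo_agent(state: SketchState) -> SketchState:
    """Stub agent: appends a canned reply instead of calling an LLM."""
    reply = f"(stub reply to: {state['messages'][-1]})"
    return {"messages": state["messages"] + [reply]}


def output(state: SketchState) -> SketchState:
    """Terminal node: passes the state through unchanged."""
    return state


def run_graph(
    nodes: Dict[str, Callable[[SketchState], SketchState]],
    edges: Dict[str, str],
    entry: str,
    state: SketchState,
) -> SketchState:
    """Follow edges from the entry node until END, merging each node's update into the state."""
    current = entry
    while current != END:
        state = {**state, **nodes[current](state)}
        current = edges[current]
    return state


nodes = {"agent": echo_agent, "output": output}
edges = {"agent": "output", "output": END}
result = run_graph(nodes, edges, "agent", {"messages": ["Hello"]})
print(result["messages"])
```

The real library adds much more on top of this loop, such as typed state, conditional routing, and compilation, but the flow of state from node to node is the same idea.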

In the next step, we’ll build our first specialized agent: the researcher agent. We’ll dive deeper into agent design patterns, learn how to define agent roles and capabilities, and see how to make the agent perform research tasks effectively.

In the previous step, we set up our environment and created a simple graph with a single agent. Now, we’ll build our first specialized agent for our collaborative research assistant system: the researcher agent.

In this step, we’ll focus on building the Researcher Agent — the component responsible for gathering and analyzing information.

A Researcher Agent is an AI system designed to thoroughly investigate topics and provide comprehensive, well-structured information. It serves as the information-gathering component in our multi-agent system with capabilities to:

The latest versions of LangGraph and LangChain offer improved approaches to building agents. Let’s explore how to create a Researcher Agent using these modern tools.

First, we need to import the necessary libraries. Note the modern import paths for LangChain and LangGraph:

from typing import Dict, List, TypedDict, Any, Optional
import json
import os
from dotenv import load_dotenv

# Modern imports for langchain and langgraph
from langchain_core.messages import HumanMessage, AIMessage, SystemMessage, BaseMessage
from langchain_openai import ChatOpenAI
from langgraph.graph import StateGraph, END

# Load environment variables
load_dotenv()

A clear system prompt is crucial for shaping the agent’s behavior:

RESEARCHER_SYSTEM_PROMPT = """
You are a skilled research agent tasked with gathering comprehensive information on a given topic.
Your responsibilities include:

1. Analyzing the research query to understand what information is needed
2. Conducting thorough research to collect relevant facts, data, and perspectives
3. Organizing information in a clear, structured format
4. Ensuring accuracy and objectivity in your findings
5. Citing sources or noting where information might need verification
6. Identifying potential gaps in the information

Present your findings in a well-structured format with clear sections and bullet points where appropriate.
Your goal is to provide comprehensive, accurate, and useful information that fully addresses the research query.
"""

Now we’ll implement the researcher agent as a function:

def create_researcher_agent(model="gpt-4o", temperature=0.7):
    """Create a researcher agent using the specified LLM."""
    # Initialize the model
    llm = ChatOpenAI(model=model, temperature=temperature)

    def researcher_function(messages):
        """Function that processes messages and returns a response from the researcher agent."""
        # Add the system prompt if it's not already there
        if not messages or not isinstance(messages[0], SystemMessage) or messages[0].content != RESEARCHER_SYSTEM_PROMPT:
            messages = [SystemMessage(content=RESEARCHER_SYSTEM_PROMPT)] + (messages if isinstance(messages, list) else [])
        # Get response from the LLM
        response = llm.invoke(messages)
        return response

    return researcher_function

The latest version of LangGraph uses TypedDict for state management, providing better type safety and clearer state structure:

# Define the state type for our research workflow
class ResearchState(TypedDict):
    """Type definition for our research workflow state."""
    messages: List[BaseMessage]  # The conversation history
    query: str  # The research query
    research: Optional[str]  # The research findings
    next: Optional[str]  # Where to go next in the graph

Next, we implement a node for our LangGraph workflow:

def researcher_node(state: ResearchState) -> ResearchState:
    """A node in our graph that performs research on the query."""
    # Get the query from the state
    query = state["query"]
    # Create a message specifically for the researcher
    research_message = HumanMessage(content=f"Please research the following topic thoroughly: {query}")
    # Get the researcher agent
    researcher = create_researcher_agent()
    # Get response from the researcher agent
    response = researcher([research_message])
    # Update the state with the research findings
    new_messages = state["messages"] + [research_message, response]
    # Return the updated state
    return {
        **state,
        "messages": new_messages,
        "research": response.content,
        "next": "output"  # In a multi-agent system, this would go to the next agent
    }

Now we can build a complete LangGraph workflow:

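def output_node(state):
    """Terminal node. The original does not show this function; it is assumed to return the state unchanged."""
    return state

def build_research_graph():
    """Build a simple research workflow using LangGraph."""
    # Create a new graph with our state type
    workflow = StateGraph(ResearchState)
    # Add nodes
    workflow.add_node("researcher", researcher_node)
    workflow.add_node("output", output_node)
    # Add edges
    workflow.add_edge("researcher", "output")
    # Connect the last node to END so the graph has an explicit finish point
    workflow.add_edge("output", END)
    # Set the entry point
    workflow.set_entry_point("researcher")
    # Compile the graph
    return workflow.compile()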

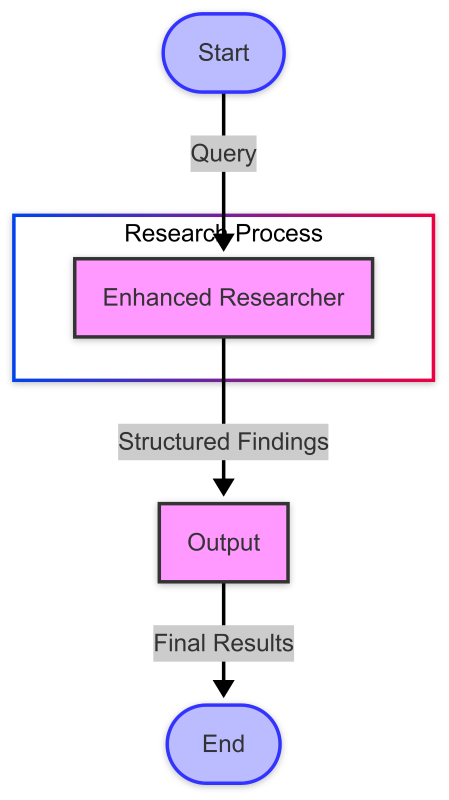

Here’s a visual representation of our workflow:

For better integration with other agents, we can enhance our researcher to provide structured output:

ENHANCED_RESEARCHER_PROMPT = """
You are a skilled research agent tasked with gathering comprehensive information on a given topic.
Your responsibilities include:

1. Analyzing the research query to understand what information is needed
2. Conducting thorough research to collect relevant facts, data, and perspectives
3. Organizing information in a clear, structured format
4. Ensuring accuracy and objectivity in your findings
5. Citing sources or noting where information might need verification
6. Identifying potential gaps in the information

Present your findings in the following structured format:

SUMMARY: A brief overview of your findings (2-3 sentences)

KEY POINTS:
- Point 1
- Point 2
- Point 3

DETAILED FINDINGS:
1. [Topic Area 1]
   - Details and explanations
   - Supporting evidence
   - Different perspectives if applicable
2. [Topic Area 2]
   - Details and explanations
   - Supporting evidence
   - Different perspectives if applicable

GAPS AND LIMITATIONS:
- Identify any areas where information might be incomplete
- Note any contradictions or areas of debate
- Suggest additional research that might be needed

Your goal is to provide comprehensive, accurate, and useful information that fully addresses the research query.
"""

This structured format makes it easier for subsequent agents (like critics and writers) to process and build upon the researcher’s findings.
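As an illustration of why the fixed section headers help, here is a minimal sketch of how a downstream step might split the researcher's reply into sections. The `split_research_sections` helper and the sample text are hypothetical and not part of the original system; the sketch assumes the model follows the header names from the prompt exactly.

```python
import re
from typing import Dict

# Header names taken from the structured format in the enhanced prompt.
SECTION_HEADERS = ["SUMMARY", "KEY POINTS", "DETAILED FINDINGS", "GAPS AND LIMITATIONS"]


def split_research_sections(text: str) -> Dict[str, str]:
    """Split the researcher's reply into a dict keyed by section header."""
    pattern = re.compile(
        r"^(" + "|".join(re.escape(h) for h in SECTION_HEADERS) + r"):[ \t]*",
        re.MULTILINE,
    )
    matches = list(pattern.finditer(text))
    sections: Dict[str, str] = {}
    for i, match in enumerate(matches):
        # Each section runs until the next header, or to the end of the text.
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        sections[match.group(1)] = text[match.end():end].strip()
    return sections


sample = """SUMMARY: Solar and wind costs keep falling.
KEY POINTS:
- Tandem cells exceed 30% efficiency
- Floating wind reaches deeper waters
GAPS AND LIMITATIONS:
- Long-duration storage remains hard
"""
parsed = split_research_sections(sample)
print(parsed["SUMMARY"])
print(parsed["KEY POINTS"].splitlines())
```

Sections the model omits are simply absent from the result, so a critic or writer step can check for missing keys before relying on them.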

With our enhanced researcher agent, we can create a more robust workflow:

We implement this workflow with a specialized state type:

class EnhancedResearchState(TypedDict):
    """Enhanced type definition for our research workflow state."""
    messages: List[BaseMessage]
    query: str
    structured_research: Optional[str]
    next: Optional[str]

Let’s see how our enhanced researcher agent handles a complex query:

Query: “What are the latest advancements in renewable energy technologies?”

Response (structured format):

SUMMARY: Recent advancements in renewable energy technologies include significant improvements in solar cell efficiency, innovative wind turbine designs, enhanced energy storage solutions, breakthroughs in green hydrogen production, and developments in advanced biofuels.

KEY POINTS:

– Solar PV technology has achieved record efficiency levels with perovskite-silicon tandem cells exceeding 30% efficiency

– Floating offshore wind turbines are expanding deployment possibilities in deeper waters

– Grid-scale battery storage costs have declined dramatically while energy density has increased

– Green hydrogen production via electrolysis has become more efficient and economically viable

– Advanced biofuels from non-food biomass sources are approaching commercial viability

DETAILED FINDINGS:

1. Solar Energy Advancements

— Perovskite solar cells have rapidly improved, with lab efficiencies exceeding 25%

— Perovskite-silicon tandem cells have reached 31.25% efficiency, approaching the theoretical limit

— Bifacial solar panels that capture light from both sides increase energy yield by 5–30%

— Building-integrated photovoltaics (BIPV) have advanced with transparent solar cells for windows

— Agrivoltaics systems that combine farming with solar production have shown promising results

2. Wind Energy Innovations

— Larger turbines with blades exceeding 100 meters in length have increased capacity factors

— Floating offshore wind platforms enable deployment in waters 60+ meters deep

— Airborne wind energy systems (kite and drone-based) are in advanced testing

— Bladeless wind turbines using oscillation technology reduce wildlife impacts and maintenance costs

— Digital twin technology and AI improving predictive maintenance and output optimization

[additional sections omitted for brevity]

GAPS AND LIMITATIONS:

– Long-duration energy storage (10+ hours) remains a significant challenge despite advancements

– Most breakthrough technologies mentioned are still scaling from lab to commercial deployment

– Cost comparisons between emerging technologies often lack standardized metrics

– Environmental impacts of some newer technologies (like certain rare earth mining for magnets) need further study

– Regional differences in technology adoption and policy support create uneven advancement landscapes

The modern approach to building researcher agents with LangGraph offers several advantages:

The researcher agent is just the first step in building a comprehensive multi-agent system. In future articles, we’ll explore:

Building a modern researcher agent with LangGraph provides a powerful foundation for multi-agent systems. By leveraging the latest APIs and structured approaches to agent development, we can create more effective, maintainable, and powerful AI systems that collaborate to solve complex problems.

The researcher agent we’ve built demonstrates how specialized agents can be designed to fulfill specific roles within a larger system — gathering and organizing information that can then be evaluated, refined, and presented by other agents in the workflow.

As we continue to explore multi-agent systems, we’ll see how these specialized components can work together to create AI solutions that surpass what any single model could accomplish alone.

In the previous steps, we set up our environment and created a researcher agent. Now, we’ll add a second specialized agent to our collaborative research assistant system: the critic agent.

A Critic Agent is designed to evaluate, challenge, and improve the work of other agents. Just as human work benefits from peer review and constructive criticism, AI outputs can be significantly enhanced by dedicated evaluation.

The Critic Agent serves critical functions in a multi-agent workflow:

The latest versions of LangGraph and LangChain provide powerful tools for building more robust and maintainable multi-agent systems. Let’s explore how to implement a Critic Agent using these modern frameworks.

Our implementation focuses on three key elements:

The foundation of our Critic Agent is a well-crafted system prompt that establishes its role and responsibilities:

CRITIC_SYSTEM_PROMPT = """
You are a Critic Agent, part of a collaborative research assistant system.
Your role is to evaluate and challenge information provided by the Researcher Agent to ensure accuracy, completeness, and objectivity.
Your responsibilities include:

1. Analyzing research findings for accuracy, completeness, and potential biases
2. Identifying gaps in the information or logical inconsistencies
3. Asking important questions that might have been overlooked
4. Suggesting improvements or alternative perspectives
5. Ensuring that the final information is balanced and well-rounded

Be constructive in your criticism. Your goal is not to dismiss the researcher's work, but to strengthen it.
Format your feedback in a clear, organized manner, highlighting specific points that need attention.
Remember, your ultimate goal is to ensure that the final research output is of the highest quality possible.
"""

A significant improvement in recent versions of LangGraph is better state management through TypedDict:

class CollaborativeResearchState(TypedDict):
    """State type for our collaborative research assistant."""
    messages: List[BaseMessage]  # The conversation history
    next: Optional[str]  # Where to go next in the graph

This approach provides clear type hints and makes the code more maintainable.

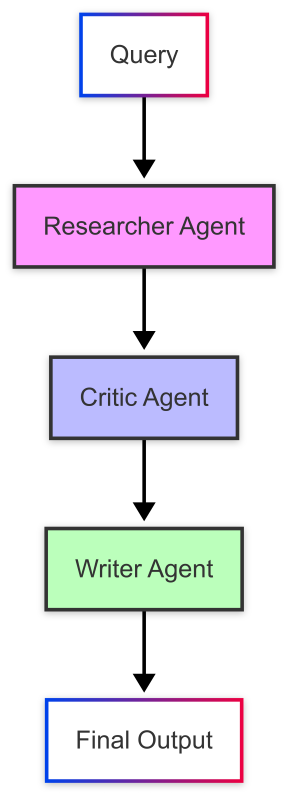

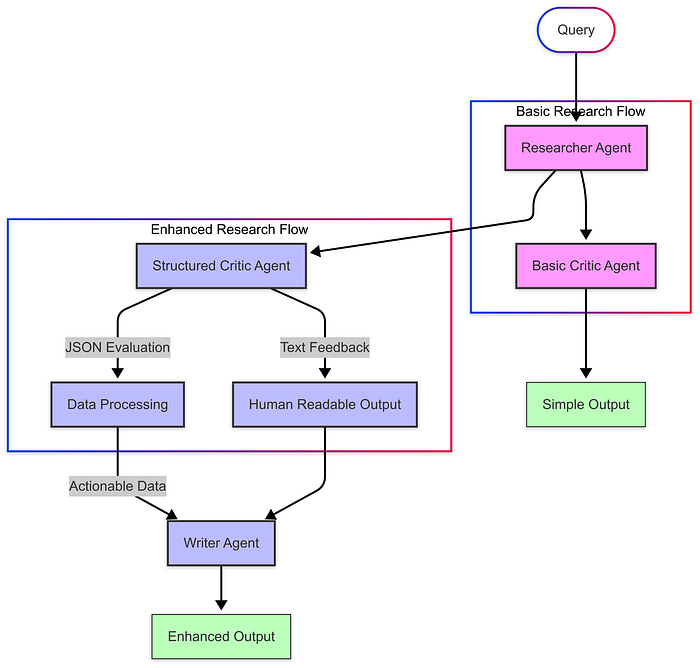

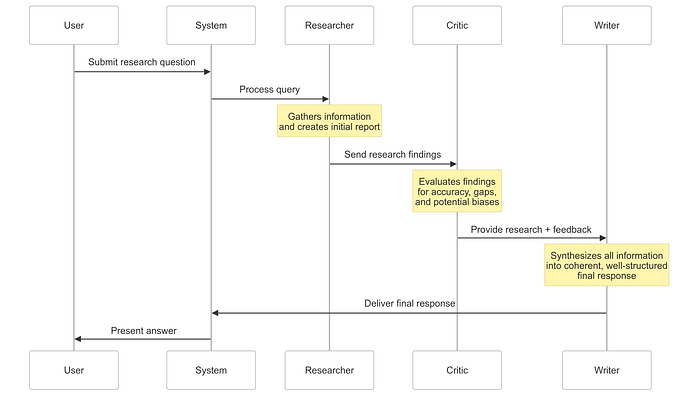

Let’s visualize the basic flow of information between our Researcher and Critic agents:

Our LangGraph implementation defines this workflow with modern syntax:

def build_collaborative_research_assistant():
    """Build a collaborative research assistant with researcher and critic agents."""
    # Create a new graph with our state type
    workflow = StateGraph(CollaborativeResearchState)
    # Add nodes (critic_node and output_node are not shown in this article and are assumed to be defined)
    workflow.add_node("researcher", researcher_node)
    workflow.add_node("critic", critic_node)
    workflow.add_node("output", output_node)
    # Add edges
    workflow.add_edge("researcher", "critic")
    workflow.add_edge("critic", "output")
    # Connect the last node to END so the graph has an explicit finish point
    workflow.add_edge("output", END)
    # Set the entry point
    workflow.set_entry_point("researcher")
    # Compile the graph
    return workflow.compile()

To make our Critic Agent more useful for downstream processes, we can enhance it to provide structured feedback in JSON format. This makes it easier for other agents (like a Writer Agent) to process and incorporate the criticism.

Here’s how we implement structured critique:

from typing import List
from pydantic import BaseModel, Field  # needed for BaseModel and Field below

class CriticEvaluation(BaseModel):
    """Structured format for critic evaluations."""
    quality_score: int = Field(description="Overall quality score from 1-10")
    strengths: List[str] = Field(description="Key strengths of the research")
    areas_for_improvement: List[str] = Field(description="Areas that need improvement")
    missing_information: List[str] = Field(description="Important information that was not included")
    bias_assessment: str = Field(description="Assessment of potential biases in the research")
    additional_questions: List[str] = Field(description="Questions that should be addressed")
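Before a downstream agent relies on the critic's JSON, it helps to check that the reply actually has the expected shape. The following is a minimal standard-library sketch of that check; the `parse_critic_reply` helper and the sample reply are hypothetical, and the field names are assumed to mirror `CriticEvaluation`. With Pydantic installed, validating the reply against the model itself would be the more natural route.

```python
import json
from typing import Any, Dict

# Field names mirror CriticEvaluation; each maps to the Python type expected after JSON parsing.
EXPECTED_FIELDS = {
    "quality_score": int,
    "strengths": list,
    "areas_for_improvement": list,
    "missing_information": list,
    "bias_assessment": str,
    "additional_questions": list,
}


def parse_critic_reply(raw: str) -> Dict[str, Any]:
    """Parse the critic's JSON reply and check field presence, types, and score range."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("critic reply must be a JSON object")
    for name, expected_type in EXPECTED_FIELDS.items():
        if name not in data:
            raise ValueError(f"missing field: {name}")
        if not isinstance(data[name], expected_type):
            raise ValueError(f"field {name} should be {expected_type.__name__}")
    if not 1 <= data["quality_score"] <= 10:
        raise ValueError("quality_score must be between 1 and 10")
    return data


# Hypothetical reply, written by hand for this sketch.
sample_reply = json.dumps({
    "quality_score": 7,
    "strengths": ["Clear structure"],
    "areas_for_improvement": ["Cite sources"],
    "missing_information": [],
    "bias_assessment": "No obvious bias",
    "additional_questions": ["What about costs?"],
})
evaluation = parse_critic_reply(sample_reply)
print(evaluation["quality_score"])
```

Raising on a malformed reply lets the calling node decide whether to retry the critic or fall back to unstructured feedback.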

This structured approach allows us to build a more robust workflow:

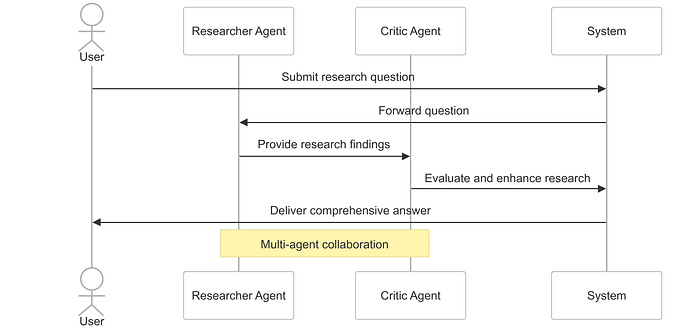

When we put it all together, our multi-agent system follows this process:

The modern implementation using the latest LangGraph and LangChain APIs offers several advantages:

By adding a Critic Agent to our multi-agent system, we create a powerful check-and-balance mechanism that improves the quality, accuracy, and completeness of our AI-generated research. This approach mimics human collaborative processes, where peer review and constructive criticism lead to better outcomes.

The structured criticism approach also provides a foundation for the next step in our multi-agent journey: adding a Writer Agent that can synthesize the research and critique into a coherent, well-crafted final response.

In our next section, we’ll explore how to build this Writer Agent and complete our multi-agent research assistant system.

In the previous steps of this article, we built a collaborative research assistant with two specialized agents: a researcher agent to gather information and a critic agent to evaluate and challenge it. Now it’s time to add the third essential component: a Writer Agent that synthesizes this material into a coherent, comprehensive response, completing a collaborative research workflow that mirrors human teams of researchers, editors, and writers.

A Writer Agent serves as the final communicator in a multi-agent system, transforming raw research and critical analysis into polished, coherent content optimized for human consumption.

The Writer Agent fulfills several crucial functions in the workflow:

The latest versions of LangGraph and LangChain provide sophisticated tools for building integrated multi-agent systems. Let’s explore how to implement a Writer Agent using these modern frameworks.

The foundation of our Writer Agent is a carefully designed system prompt:

WRITER_SYSTEM_PROMPT = """
You are a Writer Agent, part of a collaborative research assistant system.
Your role is to synthesize information from the Researcher Agent and feedback from the Critic Agent into a coherent, well-written response.
Your responsibilities include:

1. Analyzing the information provided by the researcher and the feedback from the critic
2. Organizing the information in a logical, easy-to-understand structure
3. Presenting the information in a clear, engaging writing style
4. Balancing different perspectives and ensuring objectivity
5. Creating a final response that is comprehensive, accurate, and well-written

Format your response in a clear, organized manner with appropriate headings, paragraphs, and bullet points.
Use simple language to explain complex concepts, and provide examples where helpful.
Remember, your goal is to create a final response that effectively communicates the information to the user.
"""

One of the key improvements in recent versions of LangGraph is the use of TypedDict for state management, providing better code organization and type safety:

class CollaborativeResearchState(TypedDict):
    """State type for our collaborative research assistant."""
    messages: List[BaseMessage]  # The conversation history
    next: Optional[str]  # Where to go next in the graph

The Writer node implementation follows a clean, functional approach:

def writer_node(state: CollaborativeResearchState) -> CollaborativeResearchState:
    """Node function for the writer agent."""
    # Extract messages from the state
    messages = state["messages"]
    # Create writer messages with the system prompt
    writer_messages = [SystemMessage(content=WRITER_SYSTEM_PROMPT)] + messages
    # Initialize the LLM with a balance of creativity and accuracy
    llm = ChatOpenAI(model="gpt-4o", temperature=0.6)
    # Get the writer's response
    response = llm.invoke(writer_messages)
    # Return the updated state
    return {
        "messages": messages + [response],
        "next": "output"
    }

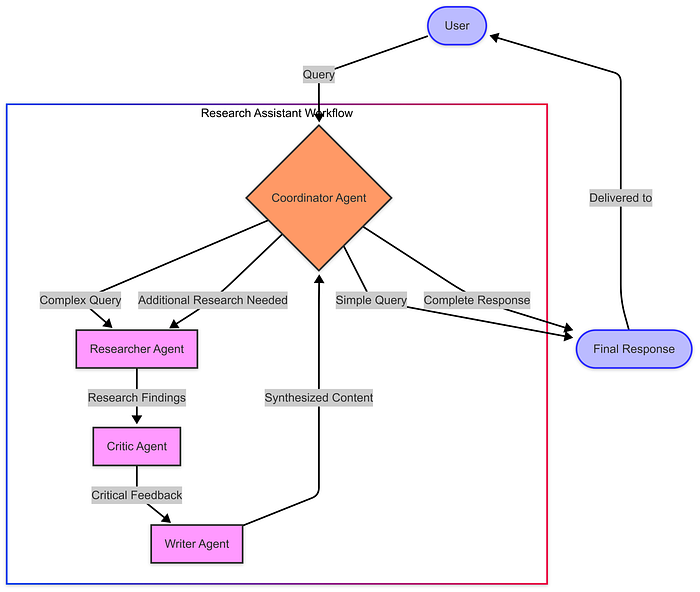

When we integrate the Writer Agent with our Researcher and Critic Agents, we create a sophisticated workflow that mimics a professional research and writing team:

The implementation of this workflow in LangGraph is clean and maintainable:

def build_complete_research_assistant():
    """Build a complete research assistant with researcher, critic, and writer agents."""
    # Create a new graph with our state type
    workflow = StateGraph(CollaborativeResearchState)
    # Add nodes (critic_node and output_node are not shown in this article and are assumed to be defined)
    workflow.add_node("researcher", researcher_node)
    workflow.add_node("critic", critic_node)
    workflow.add_node("writer", writer_node)
    workflow.add_node("output", output_node)
    # Add edges
    workflow.add_edge("researcher", "critic")
    workflow.add_edge("critic", "writer")
    workflow.add_edge("writer", "output")
    # Connect the last node to END so the graph has an explicit finish point
    workflow.add_edge("output", END)
    # Set the entry point
    workflow.set_entry_point("researcher")
    # Compile the graph
    return workflow.compile()

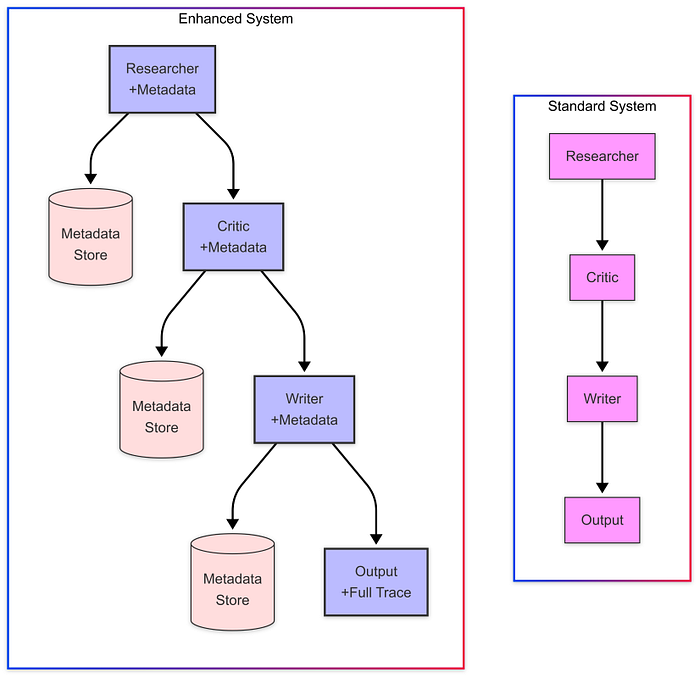

For more complex applications, we can enhance our Writer Agent and overall workflow with advanced features:

We can create an enhanced state type that tracks metadata about each agent’s contribution:

class EnhancedResearchState(TypedDict):
    """Enhanced state type with metadata for the research process."""
    messages: List[BaseMessage]  # The conversation history
    metadata: Dict[str, Any]  # Metadata about each step in the process
    next: Optional[str]  # Where to go next in the graph

This allows us to capture information like processing time, token counts, and model parameters, which is valuable for analysis and optimization:
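One way to populate such a `metadata` field is to wrap each node function so that its elapsed time is recorded alongside its normal state update. The sketch below is a rough illustration in plain Python; the `with_timing` wrapper and `stub_writer` are hypothetical names, and token counts or model parameters would need to be added by the node itself.

```python
import time
from typing import Any, Callable, Dict

NodeFn = Callable[[Dict[str, Any]], Dict[str, Any]]


def with_timing(name: str, node: NodeFn) -> NodeFn:
    """Wrap a node so its elapsed wall-clock time is recorded under metadata[name]."""
    def timed_node(state: Dict[str, Any]) -> Dict[str, Any]:
        start = time.perf_counter()
        update = node(state)
        elapsed = time.perf_counter() - start
        # Keep metadata from earlier steps and add an entry for this node.
        metadata = {**state.get("metadata", {}), name: {"seconds": elapsed}}
        return {**update, "metadata": metadata}
    return timed_node


def stub_writer(state: Dict[str, Any]) -> Dict[str, Any]:
    """Stand-in for writer_node so the sketch runs without an API key."""
    return {"messages": state["messages"] + ["draft"], "next": "output"}


timed_writer = with_timing("writer", stub_writer)
result = timed_writer({"messages": [], "metadata": {}, "next": None})
print(sorted(result["metadata"]))
```

Because the wrapper returns an ordinary node function, it could be passed to `add_node` in place of the unwrapped one.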

The three-agent structure we’ve built (Researcher → Critic → Writer) can be adapted to various application needs:

1. Hierarchical Organization:

2. Parallel Processing:

3. Hybrid Structures:

Adding a Writer Agent to your multi-agent system provides several key benefits:

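By adding a Writer Agent to our system, we’ve completed a powerful collaborative workflow that mirrors how humans work together on complex information tasks. Each agent in our system has a specialized role: the Researcher gathers information, the Critic evaluates and challenges it, and the Writer synthesizes both into a final response.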

This separation of concerns allows each agent to excel at its specific task while contributing to a cohesive whole. Using modern LangGraph and LangChain features like TypedDict for state management, we’ve built a system that’s not only powerful but also maintainable and extensible.

Multi-agent systems represent a significant advancement in AI application design, moving beyond the limitations of single-agent approaches to create more robust, balanced, and effective solutions.

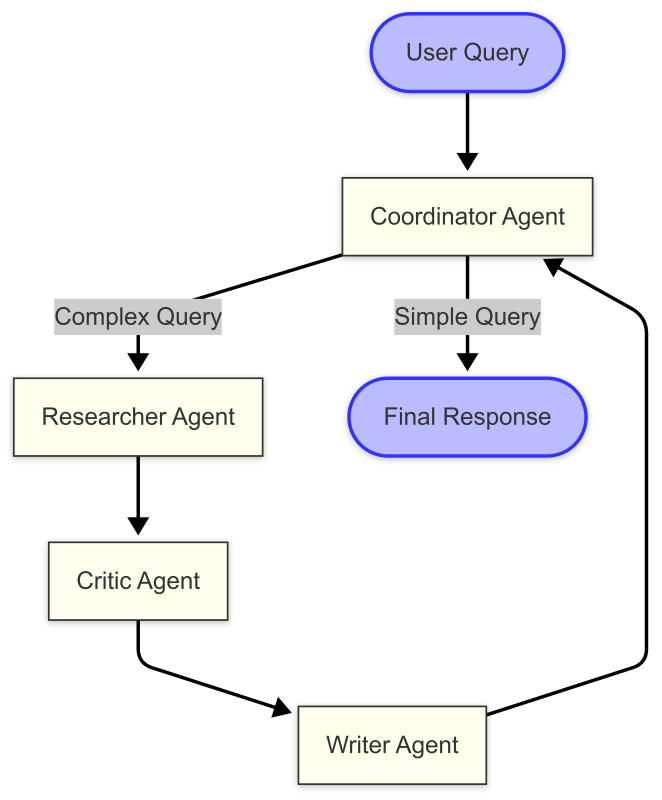

In our journey to build sophisticated multi-agent systems with LangGraph, we’ve explored specialized agents for research, criticism, and writing. Now, let’s focus on the critical component that transforms a fixed, sequential workflow into a truly dynamic, intelligent solution: the coordinator agent.

As multi-agent systems grow in complexity, coordinating the interactions between agents becomes increasingly important. A well-designed coordinator can:

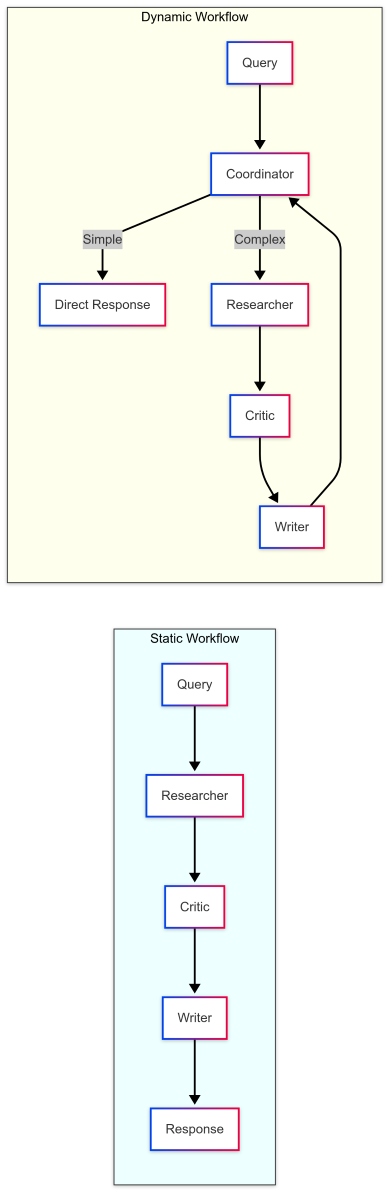

The diagram above illustrates our dynamic multi-agent architecture. Unlike previous implementations where agents operated in a fixed sequence, this system uses a Coordinator Agent to determine the optimal path at each stage. For simple queries, it can bypass research altogether, while for complex ones, it orchestrates the full research cycle.

Modern LangGraph provides powerful tools for implementing this dynamic architecture:

def coordinator_node(state: ResearchState) -> ResearchState:
    """Coordinator node that decides the workflow path."""
    # Extract messages from the state
    messages = state["messages"]
    # Create coordinator messages with the system prompt
    coordinator_messages = [SystemMessage(content=COORDINATOR_SYSTEM_PROMPT)] + messages
    # Initialize the LLM with a lower temperature for consistent decision-making
    llm = ChatOpenAI(model="gpt-4o", temperature=0.2)
    # Get the coordinator's response
    response = llm.invoke(coordinator_messages)
    # Parse the JSON response to determine next steps
    try:
        decision = json.loads(response.content)
        next_step = decision.get("next", "researcher")  # Default to researcher if not specified
    except Exception:
        # If there's an error parsing the JSON, default to the researcher
        next_step = "researcher"
    # Return the updated state
    return {"messages": messages, "next": next_step}
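Models sometimes wrap JSON in a code fence or add a sentence around it, in which case a bare `json.loads` fails and the coordinator silently falls back to the researcher. A slightly more tolerant parser might look like the sketch below. The `parse_decision` helper and `ALLOWED_NEXT` are hypothetical names, and the sketch assumes the coordinator replies with an object containing a `"next"` field, as in the examples later in this article.

```python
import json
import re

ALLOWED_NEXT = {"researcher", "done"}  # the two routes used in this article


def parse_decision(text: str, default: str = "researcher") -> str:
    """Extract the "next" field from a coordinator reply, falling back to a default."""
    # Grab the outermost {...} span so fences or surrounding prose are ignored.
    match = re.search(r"\{.*\}", text, re.DOTALL)
    if match is None:
        return default
    try:
        decision = json.loads(match.group(0))
    except json.JSONDecodeError:
        return default
    if not isinstance(decision, dict):
        return default
    next_step = decision.get("next", default)
    # Only accept known route names; anything else falls back to the default.
    if isinstance(next_step, str) and next_step in ALLOWED_NEXT:
        return next_step
    return default


fence = "`" * 3  # build the fence marker programmatically to keep this example readable
fenced_reply = f'{fence}json\n{{"reasoning": "simple fact", "next": "done"}}\n{fence}'
print(parse_decision(fenced_reply))
print(parse_decision("I think we should research this."))
```

Restricting the result to known route names also keeps an unexpected value from reaching the conditional edge mapping.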

The key improvement in our implementation is the use of conditional edges in the workflow graph:

# Add conditional edges from the coordinator
workflow.add_conditional_edges(
    "coordinator",
    lambda state: state["next"],
    {
        "researcher": "researcher",
        "done": "output"
    }
)
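The routing lambda can also be written as a named function, which makes it easier to test in isolation and to add a fallback for unexpected values. This is a small sketch under that assumption; `route_from_coordinator` and `ROUTES` are hypothetical names, with `ROUTES` mirroring the mapping above.

```python
from typing import Any, Dict

# Maps the coordinator's decision to the node that should run next.
ROUTES = {"researcher": "researcher", "done": "output"}


def route_from_coordinator(state: Dict[str, Any]) -> str:
    """Return a key of ROUTES; unknown or missing values fall back to "researcher"."""
    next_step = state.get("next")
    return next_step if next_step in ROUTES else "researcher"


print(route_from_coordinator({"next": "done"}))
print(route_from_coordinator({"next": "writer"}))
```

The function and mapping can then be passed as the second and third arguments to `add_conditional_edges` in place of the lambda and the inline dictionary.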

Let’s examine how our Coordinator Agent’s decision-making process works:

For simple factual queries, the Coordinator can provide a direct answer, avoiding unnecessary work. For complex topics requiring expertise, it orchestrates a full research cycle involving all specialized agents.

The comparison above highlights the key difference between static and dynamic workflows. The dynamic approach with a Coordinator Agent offers:

Our implementation leverages several modern LangGraph features:

This approach provides a clean, maintainable, and extensible architecture that can be adapted for various applications.

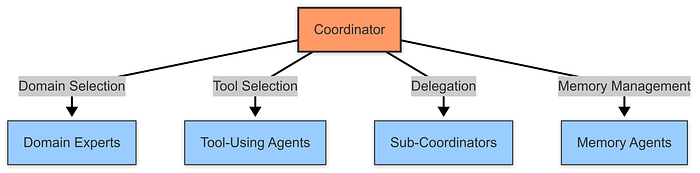

The Coordinator pattern can be extended in several ways:

With our coordinator agent implemented, we can now transform our system from a fixed, sequential workflow to a dynamic one where the coordinator decides which path to take:

def build_dynamic_research_assistant():
    """Build a dynamic research assistant with a coordinator agent managing the workflow."""
    # Create a new graph
    workflow = Graph()

    # Add nodes
    workflow.add_node("coordinator", coordinator_agent)
    workflow.add_node("researcher", researcher_agent)
    workflow.add_node("critic", critic_agent)
    workflow.add_node("writer", writer_agent)
    workflow.add_node("output", output)

    # Add conditional edges from the coordinator
    workflow.add_conditional_edges(
        "coordinator",
        lambda state: state["next"],
        {
            "researcher": "researcher",
            "done": "output"
        }
    )

    # Add the rest of the edges
    workflow.add_edge("researcher", "critic")
    workflow.add_edge("critic", "writer")
    workflow.add_edge("writer", "coordinator")
    workflow.add_edge("output", END)

    # Set the entry point
    workflow.set_entry_point("coordinator")

    # Compile the graph
    return workflow.compile()
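
Because the writer feeds back into the coordinator, this graph can loop for many cycles if the coordinator keeps answering `researcher`. One possible safeguard, sketched here in plain Python, wraps the coordinator and forces `done` once a research budget is used up. The `cycles` state key, `limit_cycles`, and `max_cycles` are assumptions introduced for this illustration; they are not part of the original implementation.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]


def limit_cycles(coordinator: Callable[[State], State], max_cycles: int = 3) -> Callable[[State], State]:
    """Wrap a coordinator node so it allows at most `max_cycles` research rounds."""

    def wrapped(state: State) -> State:
        cycles = state.get("cycles", 0)
        result = dict(coordinator(state))  # copy so the original return value is not mutated
        if result.get("next") == "researcher":
            if cycles >= max_cycles:
                result["next"] = "done"  # budget exhausted: force the exit route
            else:
                cycles += 1  # count the research round we are about to start
        result["cycles"] = cycles
        return result

    return wrapped
```

It would be registered as `workflow.add_node("coordinator", limit_cycles(coordinator_agent))`. The counter only works if the `cycles` key survives the trip through the researcher, critic, and writer nodes, so those nodes would need to pass it along. As a backstop, LangGraph also accepts a `recursion_limit` entry in the `config` passed to `invoke`, which stops a run that exceeds the given number of steps.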

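This graph creates a dynamic workflow where the coordinator is the entry point and routes each query either to the researcher or straight to the output node. From the researcher the path is fixed: researcher to critic, critic to writer, and writer back to the coordinator, which then decides whether to finish or start another research cycle.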

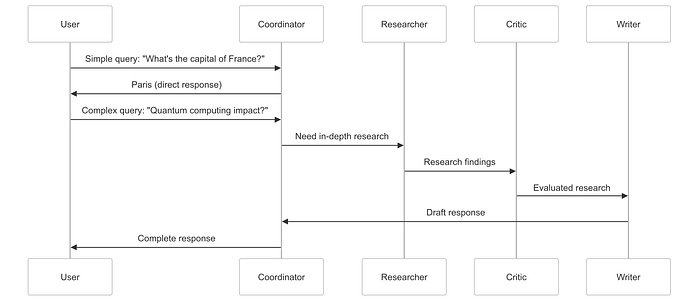

Here’s a visual representation of our dynamic multi-agent system with the coordinator agent:


The diagram illustrates the dynamic nature of our system:

Let’s also visualize how different types of queries flow through the system:


This diagram illustrates the different paths queries can take:

Let’s see how our dynamic system handles different types of queries:

Query 1: “What is the capital of France?”

Coordinator:

{
    "reasoning": "This is a simple factual question asking for the capital of France. The answer is well-known and doesn't require in-depth research, critical analysis, or specialized writing.",
    "next": "done"
}

System Response: “The capital of France is Paris.”

Query 2: “What are the implications of quantum computing for cybersecurity?”

Coordinator:

{
    "reasoning": "This query asks for complex information about quantum computing and its relationship to cybersecurity. It requires gathering detailed information, evaluating different perspectives, and synthesizing a comprehensive response.",
    "next": "researcher"
}

[The system then processes this query through the researcher, critic, and writer agents before returning to the coordinator…]

Writer: [Produces comprehensive, well-structured content about quantum computing’s implications for encryption, security protocols, etc.]

Coordinator (after reviewing the writer’s output):

{
    "reasoning": "The query has been thoroughly researched, critiqued, and synthesized into a comprehensive response that addresses the implications of quantum computing for cybersecurity from multiple angles.",
    "next": "done"
}

System Response: [The writer’s comprehensive response is delivered to the user]
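
The two walkthroughs above can be replayed without calling an LLM. The sketch below is a plain-Python simulation, not LangGraph itself: it hard-codes the graph's edges and uses a stub coordinator that returns a chosen first decision and then `done`. `trace_path`, `FIXED_EDGES`, and `ROUTES` are hypothetical names used only for this illustration.

```python
from typing import List

# Fixed edges from the graph: researcher -> critic -> writer -> coordinator
FIXED_EDGES = {"researcher": "critic", "critic": "writer", "writer": "coordinator"}

# Conditional routes out of the coordinator
ROUTES = {"researcher": "researcher", "done": "output"}


def trace_path(first_decision: str) -> List[str]:
    """Return the nodes visited when the stub coordinator first answers `first_decision`.

    `first_decision` must be 'researcher' or 'done'. Every later coordinator
    call answers 'done', so the walk always terminates.
    """
    decisions = iter([first_decision])
    path: List[str] = []
    node = "coordinator"
    while node != "output":
        path.append(node)
        if node == "coordinator":
            node = ROUTES[next(decisions, "done")]
        else:
            node = FIXED_EDGES[node]
    path.append(node)
    return path


print(" -> ".join(trace_path("done")))
# coordinator -> output
print(" -> ".join(trace_path("researcher")))
# coordinator -> researcher -> critic -> writer -> coordinator -> output
```

The first trace corresponds to Query 1, where the coordinator answers `done` immediately. The second corresponds to Query 2, where one full research cycle runs before the coordinator signs off.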

Adding a coordinator agent transforms our system in several important ways:

Perhaps most importantly, the coordinator makes using our system feel more like working with a team of intelligent experts than running a fixed, mechanical process.

While our implementation is powerful, there are several ways to make the coordinator even more sophisticated:

The coordinator agent completes our multi-agent research assistant, transforming it from a sequential workflow into a dynamic, intelligent system. By orchestrating the specialized agents we’ve built — researcher, critic, and writer — the coordinator creates a solution that’s more than the sum of its parts.

This approach mirrors how effective human teams work: specialized experts collaborate under thoughtful coordination to tackle complex problems. The result is a system that can provide simple answers quickly, tackle complex questions thoroughly, and adapt its approach to each unique situation.

As you build your own multi-agent systems with LangGraph, remember that the coordinator is what transforms a collection of agents into a truly intelligent, adaptable system.

Using LangGraph for multi-agent systems provides several advantages:

While LangGraph provides an excellent framework for building multi-agent systems, it’s worth noting the similarities and differences with Clearwater’s CWIC Flow:

LangGraph provides a powerful framework for building sophisticated multi-agent systems, with structured workflows, state management, and conditional routing. While Clearwater Analytics developed CWIC Flow before LangGraph became available, we continue to evaluate emerging technologies like LangGraph to enhance our capabilities.

The multi-agent approach, whether implemented through custom solutions like CWIC Flow or frameworks like LangGraph, represents the future of AI systems. By orchestrating specialized agents with distinct roles and responsibilities, we can create more capable, robust, and adaptable AI systems that deliver greater value to users.

As AI technology evolves, Clearwater remains committed to adopting innovations that enhance our ability to deliver actionable insights to our clients while maintaining our industry-leading standards for security, compliance, and accuracy.

Rany ElHousieny is an Engineering Leader at Clearwater Analytics with over 30 years of experience in software development, machine learning, and artificial intelligence. He held leadership roles at Microsoft for two decades, where he led the NLP team at Microsoft Research and Azure AI and contributed to advancements in AI technologies. At Clearwater, Rany continues to leverage his extensive background to drive innovation in AI, helping teams solve complex challenges while maintaining a collaborative approach to leadership and problem-solving.